Robots and cobots: meet your new colleagues

First published (with edits from this version) in Health and Safety at Work Magazine, June 2019. Illustrations, 2020

As robots get to work in the manufacturing, construction, farming, warehouse, healthcare and retail sectors, chances are you’ll be meeting them soon. Bridget Leathley reports on the latest safety thinking.

Robots are on the rise

The International Federation of Robotics (IFR) reported that industrial robot sales across the world increased by nearly a third in 2017, followed by further growth in 2018, with the metal industry and the electrical and electronics manufacturing sector showing the largest growth. The automotive industry remains the biggest customer for industrial robots, purchasing one third of the robots bought in 2017. In 2018, Europe increased its robot population by 7%, and America by 6%.

As Mike Wilson, Chair of the British Automation and Robot Association (BARA), explains, “Industrial robots have been in widescale use since the 1970s in the automotive industry. They have become more sophisticated over time, and the degree to which they can be re-programmed has improved flexibility of use.”

The growth of so-called “service” robots has been even greater. The International Organisation for Standardisation defines a service robot as one that “performs useful tasks for humans or equipment”, and the IFR reports an 85% increase in sales of service robots in 2017. Much of this growth has been in the use of “logistics robots” – or automated guided vehicles – in non-manufacturing environments such as warehousing, distribution centres and hospitals. In addition, around 10% of the service robots sold in 2017 were reportedly used in defence applications, including bomb investigation and de-mining.

Unexpected growth in agriculture

Another unexpected growth area is agriculture, with the IFR predicting sales increases of 20% per year from now until 2021. The dominant technology here is milking robots on dairy farms, although the IFR says that automated systems for crop, vegetable and fruit cultivation and harvesting are becoming established. Last year, the UK government pledged £90m to support new technology to underpin food production, including robotics for monitoring plant health, digging, planting and harvesting.

Simon Blackmore, professor at Harper Adams University and head of agricultural robotics, predicts both environmental and safety benefits from using robots in agriculture, suggesting that chemical usage could be cut by “up to 99%”. Speaking to Health and Safety at Work, he adds: “Robots can apply pesticides, herbicides and fertilisers more accurately, and without humans in the fields when substances are being applied. Cost savings for the farm and the benefits for the environment will come from a more targeted use of chemicals, and from more accurate harvesting.”

Other sectors using robots

In the care home and hospital sector, medical robots and assistance robots – although representing a small share of the total market – also saw significant sales in 2017, with greater growth anticipated in the future. Service robots in areas such as cleaning, demolition, construction, inspection and maintenance are mentioned in the IFR report, although currently at low levels of adoption. Overall, between now and 2021 the IFR predicts industrial robot sales will grow by 14% per year, and service robots by 18% a year. In other words, robots are making rapid headway in multiple sectors, and could soon be a reality in your workplace.
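Annual growth rates compound, so the cumulative effect of these forecasts is larger than the headline percentages suggest. The short Python sketch below turns an annual rate into a cumulative multiplier. It assumes three full years of growth on a single base year; the base year behind the IFR forecasts is not stated here, so treat the output as illustrative arithmetic rather than IFR figures.

```python
def compound_growth(rate: float, years: int) -> float:
    """Cumulative multiplier after `years` of growth at `rate` per year (0.14 = 14%)."""
    return (1 + rate) ** years


# Assumption: three years of compounding from one base year.
FORECASTS = {"industrial robots": 0.14, "service robots": 0.18}

for label, rate in FORECASTS.items():
    multiplier = compound_growth(rate, years=3)
    # Prints the multiplier and the percentage rise over the base year
    print(f"{label}: x{multiplier:.2f}, i.e. {multiplier - 1:.0%} above the base year")
```

On that assumption, 14% a year works out at roughly 48% more industrial robot sales by the end of the period, and 18% a year at roughly 64% more service robot sales.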

In the March edition of Health and Safety at Work, Andrew Tyrer, head of enabling technologies at Innovate UK, highlighted a UK government fund of £93m to boost projects that put robots instead of people into dangerous environments, such as confined spaces, remote wind farms or even underwater. In such locations, there is often a productivity case as well as a safety case. Robots can be sent in at access points, finding their way through pipework underneath roads and buildings, removing the need to dig up roads.

Working in nuclear waste storage pools, underground or underwater, robots are unlikely to bump into human workers. However, as Tyrer points out, “the future growth opportunities lie in the free roaming, unguarded, working among, with and for us robot.”

Autonomous robots are already in use at historical nuclear sites in the UK. Melanie Brownridge, head of technology at the Nuclear Decommissioning Authority, explains they can deal with “highly radioactive areas where it’s too hazardous for our workforce to spend any length of time, even in protective suits. Robots reduce the risk to workers significantly and can often carry out tasks faster, while avoiding additional waste such as contaminated clothing.”

Robots that can replace a security guard walking alone around a site at night already exist. One person can monitor the video feeds from several robots, and the robot will send an alert when it identifies a human presence, an open door, or an object in the wrong place.

What is a robot? And what is a cobot?

The image in our minds when we hear the word “robot” typically depends on the science fiction we consumed as children. Be it C-3PO in Star Wars, Kryten in Red Dwarf or Wall-E in the eponymous film, we probably picture something with a face, eyes and arms, and either wheels or legs to move around.

The reality, so far, has been less inspiring. One definition of a robot from Gill Pratt, chief executive at the Toyota Research Institute, is that “it senses, and it thinks, and it acts”, a definition so broad it could include a thermostat or a sensor in a washing machine. A second element of his definition of a robot is that it “does dull, dirty and dangerous tasks – things that really human beings shouldn’t have to spend time and effort on”. That definition is clearly an appealing one for safety managers.

The IFR’s definition of a robot is drawn from ISO 8373:2012 Robots and robotic devices, which offers definitions for several terms, including industrial robot; robot systems; robot cells; mobile robots; and legged robots. In the ISO’s definition, an industrial robot is an “automatically controlled, reprogrammable, multipurpose manipulator programmable in three or more axes”. In other words, it can be programmed to do new tasks without needing physical alterations, and it has some degree of autonomy, being able to perform tasks without human intervention.

Traditionally, industrial robots, of the kind familiar in the automotive industry, stay in one place behind a barrier, whereas service robots are designed to share a space with people, and are used in conjunction with a trained operator.

However, the distinction is becoming blurred as we now have a third category, the “collaborative robot”. The term is sometimes used for any robot that is not behind a physical barrier, but ISO/TS 15066:2016 Robots and robotic devices – Collaborative robots is clear that collaboration applies only when “the robot system and a human can perform tasks concurrently during production operation”.

German robotics researcher Wilhelm Bauer, of the Fraunhofer Institute for Industrial Engineering, outlines the stages that precede true collaboration. First, there is coexistence between workers and robots, where humans and robots work near each other on separate tasks but avoid getting too close. In the next step, synchronisation and co-operation move closer to full collaboration by allowing humans and robots to work more closely, but still in series, rather than in parallel.

An example of true collaboration, where human and robot workers cooperate on parallel tasks, is at Ford’s assembly plant in Cologne, Germany, where robots lift heavy parts into position, while humans carry out the more dextrous work on the same piece of equipment at the same time. A key factor is that true “cobots” can respond to their surroundings, instead of merely repeating a taught sequence of positions; for example, adjusting the angle at which they hold something to accommodate the human worker.

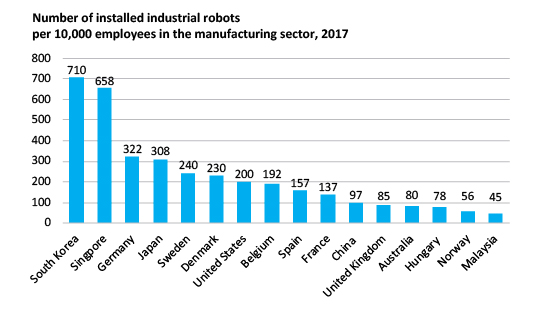

The International Federation of Robotics (IFR) has devised the concept of “robot density”, measuring the number of operational industrial robots relative to the number of employees according to OECD data, as an objective measure of the take-up of robot technology.

In its report, World Industrial Robotics 2018, which spans the automotive sector and other manufacturing industries, the IFR calculates the global average number of industrial robots per 10,000 employees as 85. In Europe, the average was 106, while the Americas had a density of 91 and Australasia/Asia had a density of 75. However, the report also found that Asia had the highest growth rate, with density increasing by around 12% a year between 2012 and 2017.

Robot density in the United Kingdom exactly matches the global average of 85, but this is made up of a density of only 42 in general factories, and 651 in the automotive sector.

However, the IFR says that this figure is low in relation to other car manufacturing economies. South Korea, for instance, has 2,435 robots per 10,000 workers in its car factories. The report links this high number, and a dramatic increase in investment during 2017, to the drive by manufacturers to build batteries for hybrid and electric cars.

Sales of robots in the UK did increase in 2017, but the IFR notes that this followed three years of reduced investment, lasting from 2014 to 2016.

Britain is lagging behind the rest of the world, according to the IFR
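Robot density is a simple ratio, and because it divides robots by employees, the density for a whole economy is an employee-weighted average of its sector densities, not a simple average of them. The Python sketch below shows both calculations. The function names and the two example plants are hypothetical, and the last step assumes the UK's overall figure of 85 covers exactly the general-factory (42) and automotive (651) workforces. On that assumption, only around 7% of the manufacturing employees counted would work in car plants, which is why the national figure sits much closer to the general-factory density than to the automotive one.

```python
def robot_density(robots: int, employees: int) -> float:
    """Operational industrial robots per 10,000 employees."""
    return robots * 10_000 / employees


def combined_density(sectors: list[tuple[int, int]]) -> float:
    """Density across several (robots, employees) sectors: total robots over
    total employees, i.e. the employee-weighted average of sector densities."""
    total_robots = sum(robots for robots, _ in sectors)
    total_employees = sum(employees for _, employees in sectors)
    return robot_density(total_robots, total_employees)


def implied_share(overall: float, density_a: float, density_b: float) -> float:
    """Share of employees in sector B, assuming the overall density is the
    employee-weighted mix of the sector A and sector B densities only."""
    return (overall - density_a) / (density_b - density_a)


# Hypothetical plants: 1,000 robots for 200,000 staff, and 500 robots for 5,000 staff
print(robot_density(1_000, 200_000))  # 50.0
print(combined_density([(1_000, 200_000), (500, 5_000)]))

# UK figures quoted above: overall 85, general factories 42, automotive 651
print(f"{implied_share(85, 42, 651):.1%}")  # 7.1%
```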

Free range robots

Typically, installing industrial robots needs a major redesign of the work space, with large guarded spaces dedicated to the robots. Free roaming robots can be integrated into existing workplaces with little physical redesign beyond installing charging points. Vendors boast of how easily they can be set up and operated, and quickly modified for new tasks. The fact that safety guarding is not required saves both cost and floor space. But when robots and humans work in the same space, how can you keep people safe?

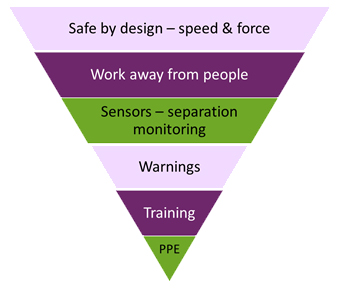

Any robot that is likely to come into contact with humans, whether free roaming or truly collaborative, has to be “safe by design” – the risk control placed at the top of the control hierarchy. In other words, a robot should not be able to move with such speed and force that it could crush a person. At Harper Adams, Blackmore explains that agricultural robots have a “collapsible bumper which will take the force of any collision and then stop the robot from moving until an operator checks and resets it”.
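Blackmore's description amounts to a latching stop: once the bumper has been struck, the robot stays stopped even after the obstruction clears, until a person deliberately checks and resets it. The Python sketch below models that behaviour as a two-state switch. The class and method names are hypothetical and this is not the Harper Adams control system; a real implementation would sit in safety-rated hardware and software rather than application code like this.

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    STOPPED = "stopped"


class BumperInterlock:
    """Hypothetical latching stop: a bumper contact stops the robot, and
    only an operator reset (with the bumper clear) lets it move again."""

    def __init__(self) -> None:
        self.state = State.RUNNING

    def on_bumper_contact(self) -> None:
        # Any collision latches the robot into the stopped state.
        self.state = State.STOPPED

    def may_move(self) -> bool:
        return self.state is State.RUNNING

    def operator_reset(self, bumper_clear: bool) -> bool:
        # Releasing the bumper alone never restarts the robot;
        # a reset is refused while the bumper is still compressed.
        if self.state is State.STOPPED and bumper_clear:
            self.state = State.RUNNING
        return self.may_move()


robot = BumperInterlock()
robot.on_bumper_contact()
print(robot.may_move())                          # False
print(robot.operator_reset(bumper_clear=False))  # False: obstruction still there
print(robot.operator_reset(bumper_clear=True))   # True: operator has checked and reset
```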

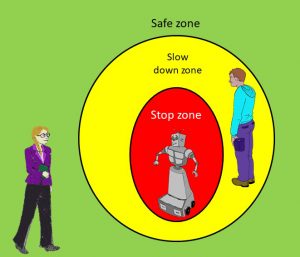

Coexistent (rather than truly collaborative) robots are able to come out from behind their physical barriers, or in some cases their light curtains, and work amongst human colleagues because they incorporate sensor technology that means they can keep their distance from human users.

Universal Robots, a Danish manufacturer of cobots, offers the following definitions of the distance controls in place:

- the safe zone for human workers – anywhere that is completely out of reach of the robot;

- the slow down zone – areas near the robot where there is no immediate danger to humans, but close enough to cause the robot to slow down;

- the stop zone – areas where harm to human workers is sufficiently imminent that the robot stops.

These zones must take account not just of the movement of the robot, but also of the stretch of any of its limbs or attachments, and of any tools or products that the robot might be holding. Depending on the task, the zones might also need to consider an ejection region – for example, if a robot is drilling or cutting, and projectiles could harm people in a larger area.
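The three zones, and the way tools, held products and ejection regions enlarge them, can be sketched as a simple distance check. The Python below is a deliberately simplified illustration, not Universal Robots' implementation: it measures distance from the robot's base, and the names and numbers (reach, stop radius and margins, all in metres) are hypothetical. Real systems measure separation from the moving arm and size their zones from measured stopping times and distances, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass
class ZoneConfig:
    """Hypothetical zone settings; all distances in metres from the robot base."""
    arm_reach: float              # furthest the bare arm can extend
    stop_radius: float            # inside this, harm is treated as imminent
    tool_length: float = 0.0      # extra reach from a gripper, tool or held product
    ejection_margin: float = 0.0  # extra distance for drilling or cutting debris

    @property
    def hazard_radius(self) -> float:
        # Anything beyond this is "completely out of reach": the safe zone.
        return self.arm_reach + self.tool_length + self.ejection_margin


def classify(distance: float, cfg: ZoneConfig) -> str:
    """Return 'safe', 'slow' or 'stop' for a person detected `distance` metres away."""
    if distance > cfg.hazard_radius:
        return "safe"
    if distance > cfg.stop_radius:
        return "slow"
    return "stop"


handler = ZoneConfig(arm_reach=1.3, stop_radius=0.8, tool_length=0.2)
print(classify(2.0, handler), classify(1.0, handler), classify(0.5, handler))  # safe slow stop

# The same cell with an ejection region added for cutting debris
cutter = ZoneConfig(arm_reach=1.3, stop_radius=0.8, tool_length=0.2, ejection_margin=1.0)
print(classify(2.0, cutter))  # slow
```

The point of the second example is the one made in the text: a person standing 2 m away is outside the zones of a robot that only handles parts, but inside the slow-down zone once an ejection region is added.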

Discussing agricultural robots used for cultivating or harvesting crops, Blackmore describes how a single human operator might be able to remotely guide and oversee perhaps six different robotic arrays on six separate fields. To protect any humans or livestock that stray towards it, he explains that the robots, which would be smaller than a typical tractor, “use Lidar [light detection and ranging systems] to detect obstacles in front, and 12 LED systems to create a safety curtain around the vehicle.”

For cobots, where safety by distance is not practical, safe by design is critical. Anna Pavan of cobot supplier Acrovision explains the company’s approach to “safe by design”. “We identify hazards and determine controls early in the design process, to eliminate or minimise the risk of harm throughout the construction and life of the product. This is more effective than identifying safety hazards after the robot is built, and then adding on guards such as physical barriers or enclosures. Safe by design removes the need for additional safety features later. If any failures occur, the robots go into safe mode.”

In the hierarchy of control, training is near the bottom, but educating workers who need to work around free moving robots is essential. If some robots are collaborative and others need to maintain separation, workers need to know the difference. Colour coding is sometimes used to distinguish between those robots that are safe to approach, and those which will stop if you get too close.

Under the Machinery Directive (2006/42/EC) a robot is “partly completed machinery” and as such does not carry its own CE marking. The organisation undertaking the final incorporation or assembly process becomes the manufacturer of the complete product, and is responsible for its risk assessment. Traditional industrial robots are usually installed by professional systems integrators who, according to Mike Wilson, generally understand the controls needed in a production environment. However, he highlights one area of potential risk. “Free roaming robots are sometimes bought by smaller companies, without experience of robotic risk assessments. They might be easy to program, and that presents a challenge. Companies have to be sure that the staff who reprogram the robots know what they are doing,” he advises.

The law

To respond flexibly to their environments, collaborative robots need a narrow form of artificial intelligence (AI), something that barrister Jacob Turner has written about in his recent book Robot Rules: Regulating Artificial Intelligence. But the use of AI, which of course is based on code and algorithms written by very human hands, raises the question of who takes responsibility if the robot, and the algorithms behind it, cause harm to a human worker.

Many technology journalists refer to the three laws of robotics coined by American science fiction writer Isaac Asimov. These “laws” are sometimes viewed as if they were indeed intended as the basis of regulation, but Turner points out that these laws “were written as science fiction and were always intended to lead to problems”.

Speaking at a meeting of the Oxford AI Society in February 2019, Turner set out his view that lawmakers should not wait for robots to get smarter before putting regulations in place, but also argued that society should embrace the opportunities AI presents. “We are in danger of oscillating between the complacency of the optimists and the craven scruples of the pessimists. AI presents incredible opportunities for the benefit of humanity and we do not wish to fetter or shackle this progress unnecessarily.”

However, others argue that today’s legal framework can flex to the requirements of an AI-enabled future. Today, as with any other machine or vehicle, a court of law will examine the facts surrounding an accident and can assign responsibility to an employee, the employer who trained and supervised them, the integrator or installer, or the manufacturer.

Nick McMahon, head of health and safety at law firm RPC, believes that this flexibility will offer adequate protection in the future. “The concept of reasonable practicability is likely to remain fit for purpose. The challenge for industry and regulator is going to be in assessing the risk accurately, rather than in developing legislation. As new technologies develop, so should analysis of trends and emerging or predicted risks of their use,” he suggests.


Speaking at the same Oxford AI Society meeting, Jade Leung, Head of Research and Partnerships at the Center for the Governance of AI (Oxford), cast doubt on regulators’ ability to cope. According to Leung, “States are ill equipped to govern AI in isolation. States have national boundaries, but emerging technologies and global businesses don’t. Technology often moves too fast for regulation, and the governance of emerging technologies requires expertise which governments don’t tend to have in house.”

First law – A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second law – A robot must obey orders given to it by human beings except where such orders would conflict with the first law.

Third law – A robot must protect its own existence as long as such protection does not conflict with the first or second laws.

Isaac Asimov’s Three Laws of Robotics (1942)

In the UK, the HSE is closely watching robotics and autonomous systems, which it refers to as RAS. The regulator’s latest Foresight report reminds us, if we needed reminding, that “where RAS and people share the same workspace, the integrity of both health and safety-related control systems is of major importance”.

ISO is further ahead, with a technical committee focusing on robot safety. ISO/TS 15066:2016 deals specifically with the safety requirements for collaborative robot systems, covering hazard identification, risk elimination and passive and active risk reduction.

At BARA, Wilson sums up how robots and people should work together. “Robots are better at being robots. They can work fast, consistently, and carry more weight. They don’t get tired or bored. They can do the sort of jobs that people shouldn’t be doing in this day and age,” he says.

Although robots have become better at sensing, humans, as the BARA website explains in “When not to use robots”, “have an impressive array of sensors (touch, vision, hearing, pattern recognition)”. BARA also mentions that humans are better at learning, and at making decisions where data is missing. Perhaps tongue-in-cheek, BARA suggests that humans are “flexible and easy to program.”

Asimov’s literary intentions were to make robots dangerous and threatening. Our role as health and safety practitioners is to make workplaces safer and healthier. If we are to replace Asimov’s vision with our own, we must ensure we understand the technology well enough to take a sensible and proportionate approach to risk management.